Final Projects Overview

These projects explore two areas of computational photography:

- Project 1: Image Quilting - A technique for texture synthesis and transfer using patch-based sampling and optimization

- Project 2: Light Field Camera - Implementation of computational refocusing and aperture adjustment using light field data

Project 1: Image Quilting

The Image Quilting project implements the algorithm described in the SIGGRAPH 2001 paper by Efros and Freeman for texture synthesis and transfer. The core objectives include:

- Creating larger textures from small samples through patch-based synthesis

- Implementing overlapping patch selection with error minimization

- Using dynamic programming for optimal seam finding

- Achieving texture transfer while preserving target image structure

This implementation progresses through increasingly sophisticated methods, from basic random sampling to advanced texture transfer with seam optimization.

Random Sampling for Texture Synthesis

The first step in image quilting involves randomly sampling patches from a source texture to create a larger texture. This implementation:

- Takes square patches of a specified size from the input texture

- Places them in a grid pattern to create the output image

- Handles edge cases where patches extend beyond the desired output size
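The random-sampling baseline can be sketched in a few lines of NumPy. This is a minimal illustration, not the project's code: the function name `quilt_random` and its parameters are hypothetical, the output is assumed to be square, and patches that would overrun the output border are simply cropped.

```python
import numpy as np

def quilt_random(sample, out_size, patch_size, rng=None):
    """Tile an out_size x out_size image with randomly chosen square patches.

    sample: H x W (x C) array holding the source texture (H, W >= patch_size).
    Patches that would extend past the output border are cropped.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = sample.shape[:2]
    out = np.zeros((out_size, out_size) + sample.shape[2:], dtype=sample.dtype)
    for i in range(0, out_size, patch_size):
        for j in range(0, out_size, patch_size):
            # Random top-left corner such that the whole patch fits inside the sample.
            y = rng.integers(0, h - patch_size + 1)
            x = rng.integers(0, w - patch_size + 1)
            patch = sample[y:y + patch_size, x:x + patch_size]
            # Crop the patch where it would run past the output edge.
            ph = min(patch_size, out_size - i)
            pw = min(patch_size, out_size - j)
            out[i:i + ph, j:j + pw] = patch[:ph, :pw]
    return out
```

Because neighbouring patches are chosen independently, their borders rarely match, which is why the result shows visible block edges.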

Overlapping Patches

To improve the quality of synthesized textures, we implement overlapping patches with error minimization:

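- Computes the SSD (sum of squared differences) between each candidate patch and the already-synthesized output in the overlap regions

- Selects patches that minimize error in overlapping areas

- Uses a tolerance parameter to choose among several near-minimal-error patches rather than always the single best one, adding variation to the output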
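The cost computation and tolerance-based selection described above can be sketched as follows. This is a brute-force illustration under stated assumptions: `overlap_ssd` and `choose_patch` are hypothetical names, the mask marks the overlap with 1s, and the tolerance rule (accept any patch whose cost is at most `(1 + tol)` times the minimum) is one common choice, not necessarily the rule used in this project.

```python
import numpy as np

def overlap_ssd(template, mask, sample, patch_size):
    """SSD between the masked template and every patch_size x patch_size window of sample.

    template: p x p (x C) array of already-synthesized output pixels.
    mask: p x p array, 1 inside the overlap region and 0 elsewhere.
    Returns an (H - p + 1) x (W - p + 1) cost map indexed by window top-left corner.
    """
    h, w = sample.shape[:2]
    p = patch_size
    m = mask[..., None] if sample.ndim == 3 else mask  # broadcast mask over channels
    costs = np.empty((h - p + 1, w - p + 1))
    for y in range(h - p + 1):
        for x in range(w - p + 1):
            diff = (sample[y:y + p, x:x + p] - template) * m
            costs[y, x] = np.sum(diff ** 2)
    return costs

def choose_patch(costs, tol, rng=None):
    """Pick a random window whose cost is within a factor (1 + tol) of the minimum."""
    rng = np.random.default_rng() if rng is None else rng
    min_cost = costs.min()
    ys, xs = np.nonzero(costs <= min_cost * (1 + tol))
    k = rng.integers(len(ys))
    return ys[k], xs[k]
```

A vectorized version (for example, computing the SSD with filtering) would be much faster; the explicit loops are kept here for readability.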

Seam Finding

The seam finding algorithm uses dynamic programming to find optimal boundaries between overlapping patches, significantly reducing visible artifacts:

- Computes squared RGB differences between overlapping regions to create error surfaces

- Uses dynamic programming to find minimum-cost paths through error surfaces

- Handles both horizontal and vertical seams through matrix transposition

- For patches that overlap on both the left and the top, combines the two seam masks with a logical AND

- Applies the resulting mask to composite overlapping patches along the optimal boundaries
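The seam-finding steps above can be sketched as follows, assuming the error surface is the per-pixel squared RGB difference summed over channels. The function names are hypothetical, and the mask convention (True means "take the new patch's pixel") is an assumption chosen for this illustration.

```python
import numpy as np

def vertical_seam_mask(err):
    """Minimum-cost top-to-bottom seam through an H x W error surface via DP.

    Returns a boolean mask that is True on and to the right of the seam,
    i.e. where the new patch's pixels should be used.
    """
    h, w = err.shape
    cost = err.astype(float).copy()
    # Forward pass: cumulative cost of the cheapest path ending at each pixel.
    for i in range(1, h):
        left = np.concatenate(([np.inf], cost[i - 1, :-1]))
        right = np.concatenate((cost[i - 1, 1:], [np.inf]))
        cost[i] += np.minimum(np.minimum(left, cost[i - 1]), right)
    # Backtrack from the cheapest bottom pixel, moving at most one column per row.
    seam = np.empty(h, dtype=int)
    seam[-1] = int(np.argmin(cost[-1]))
    for i in range(h - 2, -1, -1):
        j = seam[i + 1]
        lo, hi = max(j - 1, 0), min(j + 2, w)
        seam[i] = lo + int(np.argmin(cost[i, lo:hi]))
    return np.arange(w)[None, :] >= seam[:, None]

def horizontal_seam_mask(err):
    """Left-to-right seam: run the vertical version on the transposed surface."""
    return vertical_seam_mask(err.T).T

def patch_mask(old, new, overlap):
    """p x p mask (True = new patch) for a patch with both left and top overlap."""
    err = np.sum((old.astype(float) - new.astype(float)) ** 2, axis=-1)  # squared RGB difference
    p = err.shape[0]
    left = np.ones((p, p), dtype=bool)
    left[:, :overlap] = vertical_seam_mask(err[:, :overlap])
    top = np.ones((p, p), dtype=bool)
    top[:overlap, :] = horizontal_seam_mask(err[:overlap, :])
    return left & top  # intersect the two seam masks
```

The output pixels would then be `np.where(mask[..., None], new, old)`, so each pixel comes from exactly one patch and the cut follows the lowest-error path.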

Texture Transfer

The texture transfer implementation combines texture synthesis with a guidance image:

- Balances texture similarity against correspondence to the guidance image

- Uses an alpha (α) parameter to set the trade-off between matching the texture overlap and matching the guidance image

- Applies seam finding for smooth patch transitions
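In Efros and Freeman's paper, the transfer error is α times the overlap matching error plus (1 − α) times the error between correspondence maps, so a larger α favours texture coherence. The sketch below assumes this project follows the same convention and uses a grayscale intensity map as the correspondence map; both are assumptions, and `transfer_cost_map` is a hypothetical name.

```python
import numpy as np

def transfer_cost_map(sample, template, mask, sample_lum, target_lum, patch_size, alpha):
    """alpha * overlap SSD + (1 - alpha) * correspondence SSD for every window of sample.

    sample: H x W x C texture. template/mask: p x p (x C) synthesized pixels and overlap mask.
    sample_lum: H x W correspondence map of the texture (assumed: grayscale intensity).
    target_lum: p x p correspondence map of the guidance image at the output location.
    """
    h, w = sample.shape[:2]
    p = patch_size
    m = mask[..., None]  # broadcast the overlap mask over color channels
    costs = np.empty((h - p + 1, w - p + 1))
    for y in range(h - p + 1):
        for x in range(w - p + 1):
            overlap = np.sum(((sample[y:y + p, x:x + p] - template) * m) ** 2)
            corr = np.sum((sample_lum[y:y + p, x:x + p] - target_lum) ** 2)
            costs[y, x] = alpha * overlap + (1 - alpha) * corr
    return costs
```

The patch chosen from this cost map is then stitched in with the same seam-finding step used for plain synthesis.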

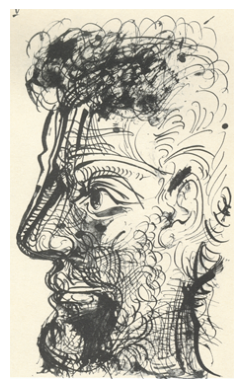

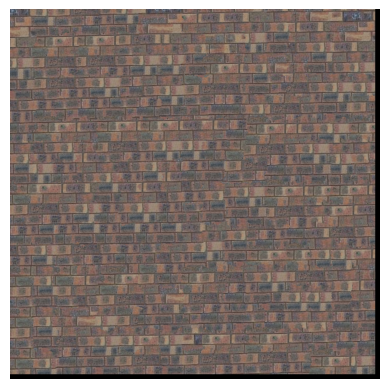

Feynman Portrait with Sketch Texture (α = 0.5)

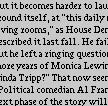

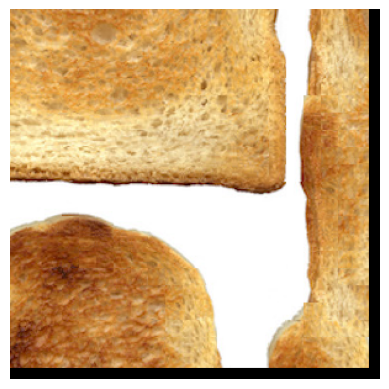

Feynman Portrait with Toast Texture (α = 0.4)

Project 2: Light Field Camera

The Light Field Camera project explores computational photography techniques based on the work of Ng et al., demonstrating how images captured from a grid of camera positions on a plane enable post-capture effects such as refocusing and aperture adjustment. Key aspects include:

- Processing light field data from multiple camera positions

- Implementing depth-based refocusing through image shifting and averaging

- Simulating variable aperture effects using selective image sampling

The implementation uses real light field data to show how simple operations such as shifting and averaging can reproduce photographic effects, like changing the focal plane or the aperture, that a conventional camera fixes at capture time.

Depth Refocusing

The depth refocusing implementation processes a light field dataset consisting of multiple images captured from a 17x17 camera array:

- Loads and organizes rectified images with their corresponding spatial coordinates

- Implements a shift-and-add algorithm in which images are:

  - Shifted relative to the center reference image (position 8,8)

  - Aligned according to a scale factor that determines the focal depth

  - Averaged to create the final refocused image

- Explores scale factors from 0.1 (far focus) to -0.5 (near focus)

- Handles edge cases with proper image padding and boundary management

- Creates smooth focus transitions through 100 interpolated frames
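The shift-and-add steps above can be sketched as follows. This is an illustration under several assumptions: each view comes with a hypothetical `(y, x)` camera coordinate, each view is shifted by `scale * (center - coord)`, and shifts are rounded to whole pixels with edge padding (a sub-pixel interpolator such as `scipy.ndimage.shift` would be smoother). The sign and axis conventions depend on the dataset, so flipping the sign of `scale` may be needed to move the focal plane in the expected direction.

```python
import numpy as np

def shift_image(img, dy, dx):
    """Translate img by (dy, dx) whole pixels, filling uncovered borders with edge values."""
    dy, dx = int(round(dy)), int(round(dx))
    h, w = img.shape[:2]
    pad = ((abs(dy), abs(dy)), (abs(dx), abs(dx))) + ((0, 0),) * (img.ndim - 2)
    padded = np.pad(img, pad, mode="edge")
    y0, x0 = abs(dy) - dy, abs(dx) - dx  # window offset so that out[y, x] = img[y - dy, x - dx]
    return padded[y0:y0 + h, x0:x0 + w]

def refocus(images, coords, center, scale):
    """Average all views after shifting each by scale * (center - coord)."""
    acc = np.zeros(images[0].shape, dtype=float)
    cy, cx = center
    for img, (y, x) in zip(images, coords):
        acc += shift_image(img, scale * (cy - y), scale * (cx - x))
    return acc / len(images)
```

A focus sweep like the animation below could then be rendered as `[refocus(images, coords, center, s) for s in np.linspace(0.1, -0.5, 100)]`.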

Depth Refocusing Animation (100 frames, scale factor 0.1 to -0.5)

Aperture Adjustment

The aperture adjustment implementation simulates variable aperture effects by selectively sampling the light field:

- Centers sampling around the reference camera (position 8,8)

- Implements circular aperture simulation by:

  - Selecting images within a specified radius (0-8) of the center

  - Applying depth refocusing (scale factor -0.2) to the selected subset

  - Averaging contributions from all cameras within the aperture

- Demonstrates depth of field effects:

  - Small radius (0-2): deep depth of field, with more of the scene in focus

  - Large radius (6-8): shallow depth of field, with stronger blur (bokeh) away from the focal plane
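Because refocusing is applied before averaging, simulating an aperture amounts to averaging only the aligned views whose camera lies inside a circle around the center. The sketch below assumes `aligned` already holds every view shifted for the chosen focal depth (for example, scale -0.2) and measures the radius as Euclidean distance in grid units; the function names are hypothetical.

```python
import numpy as np

def aperture_mask(grid_positions, center, radius):
    """True for cameras whose grid distance from center is at most radius."""
    pos = np.asarray(grid_positions, dtype=float)
    d = np.hypot(pos[:, 0] - center[0], pos[:, 1] - center[1])
    return d <= radius

def simulate_aperture(aligned, grid_positions, center, radius):
    """Average only the views inside a circular synthetic aperture.

    aligned: N x H x W x C array of views already shifted for the chosen focal depth.
    grid_positions: N (row, col) grid indices, one per view.
    """
    keep = aperture_mask(grid_positions, center, radius)
    return np.asarray(aligned, dtype=float)[keep].mean(axis=0)
```

With radius 0, only the center view is kept, which behaves like a pinhole; each increase in radius adds more off-center views, and points away from the focal plane are blurred more strongly.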

Aperture Adjustment Animation (Radius 0-8, 1 frame per second)